I was reading about logging in ASP.NET when I came across this statement about logging to the database:

When logging to SQL Server, don’t do so directly. Instead, add log messages to an in-memory queue and have a background worker dequeue and insert data to SQL Server.

Paraphrased from Microsoft – No asynchronous logger methods

In this article, I’ll show how to implement this background database logger idea. First, I’ll start by showing the design, and then I’ll show the code.

Table of Contents

Design

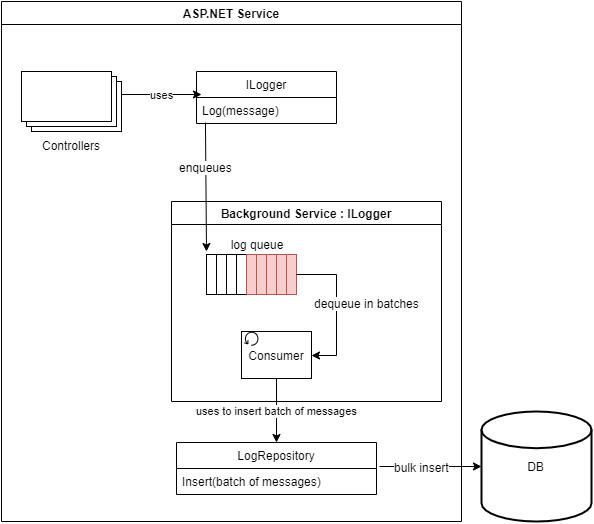

We can implement Microsoft’s background database logger idea by using the consumer/producer pattern with a background service. To make sure this scales well, we’ll bulk insert log messages into the database.

I’ll go into details about this design, but first here’s the design diagram:

With the consumer/producer pattern, you have one or more producers enqueuing messages to a shared queue. You have one or more consumers dequeuing messages from the shared queue and processing them. In our case, we’ll have multiple producers (anything that logs, mostly controllers), and a single consumer.

In ASP.NET, you can add hosted services that run in the background. These are referred to as background services. We’ll use a background service for two purposes: it’ll contain the shared queue, and it’ll act as the consumer.

The producers only need to be exposed to a Log() method. They don’t need to know they’re using a background service, or that it’s logging to the database. This is why we’re using the ILoggerService interface.

Since there can be multiple producers, there can be multiple log messages coming in at the same time. Executing lots of individual INSERT statements can degrade system performance. Instead, we’ll bulk insert the messages to the database. In order to bulk insert, the consumer will need to be able to batch read from the queue.

Code

In this section, I’ll show the code for the design shown above. I’ll be building this from the outside-in, and building the background service last.

Throughout this code, I’ll be using Console.WriteLine(). I’m running the service with a console interface so I can easily see what’s going on.

ILoggerService and a controller that uses it

First things first, we need to add ILoggerService. It’s best practice to code against interfaces instead of implementations. The producers only need to have access to the Log() method. They don’t need to know anything about the concrete implementation.

public interface ILoggerService

{

void Log(LogLevel logLevel, string message);

}

Code language: C# (cs)Note: I’m not using the built-in ILogger. It has a huge amount of methods, and I didn’t want to have to implement them in the background service.

Here’s an example of a controller that logs messages. It needs ILoggerService dependency injected.

[Route("[controller]")]

[ApiController]

public class RecipesController : ControllerBase

{

private readonly ILoggerService Logger;

public RecipesController(ILoggerService logger)

{

Logger = logger;

}

[HttpGet("{id}")]

public string Get(int id)

{

Logger.Log(LogLevel.Debug, $"GET /Recipes/{id}");

return "recipe";

}

}

Code language: C# (cs)Log repository for bulk inserting

We want to bulk insert the log messages. Any time you’re interacting with a database, it’s a good idea to implement the repository pattern. With this pattern, you encapsulate the database interaction logic in a repository class.

First, we need to add the LogMessage model class:

public class LogMessage

{

public int ThreadId { get; set; }

public string Message { get; set; }

public DateTimeOffset Timestamp { get; set; }

}

Code language: C# (cs)Next, since we want to use SqlBulkCopy, and we’re using the latest .NET (not .NET Framework), we’ll need to install the System.Data.SqlClient package. Do that by executing the following command (Note: this is using View > Other Windows > Package Manager Console):

Install-Package System.Data.SqlClient

Code language: PowerShell (powershell)Now we can implement the LogRepository class. It will do a bulk insertion using SqlBulkCopy.

Whenever you’re interacting with an external dependency, such as a database, it’s a good idea to make it fault tolerant and retry when transient errors happen. In this case, we’ll try to make this resilient by catching a few transient SQL exceptions and retrying the bulk insertion a few times.

Here’s the LogRepository class:

using System.Data;

using System.Data.SqlClient;

public class LogRepository : ILogRepository

{

private const string TABLE = "Log";

private readonly string ConnectionString;

private readonly HashSet<int> transientSqlErrors = new HashSet<int>()

{

-2, 258, 4060

};

private const int MAX_RETRIES = 3;

private const int RETRY_SECONDS = 5;

public LogRepository(string connectionString)

{

ConnectionString = connectionString;

}

public async Task Insert(List<LogMessage> logMessages)

{

DataTable table = new DataTable();

table.TableName = TABLE;

table.Columns.Add(nameof(LogMessage.Timestamp), typeof(DateTimeOffset));

table.Columns.Add(nameof(LogMessage.Message), typeof(string));

table.Columns.Add(nameof(LogMessage.ThreadId), typeof(int));

foreach (var logMessage in logMessages)

{

var row = table.NewRow();

row[nameof(LogMessage.Timestamp)] = logMessage.Timestamp;

row[nameof(LogMessage.Message)] = logMessage.Message ?? (object)DBNull.Value;

row[nameof(LogMessage.ThreadId)] = logMessage.ThreadId;

table.Rows.Add(row);

}

await BulkInsertWithRetries(table);

}

private async Task BulkInsertWithRetries(DataTable table)

{

int attempts = 1;

while (true)

{

try

{

using (var sqlBulkCopy = new SqlBulkCopy(ConnectionString))

{

sqlBulkCopy.DestinationTableName = table.TableName;

await sqlBulkCopy.WriteToServerAsync(table);

return;

}

}

catch (SqlException sqlEx)

when (transientSqlErrors.Contains(sqlEx.Number) && attempts <= MAX_RETRIES)

{

Console.WriteLine($"Transient SQL error. Retrying in {RETRY_SECONDS} seconds");

await Task.Delay(TimeSpan.FromSeconds(RETRY_SECONDS));

attempts++;

}

}

}

}

Code language: C# (cs)Note: We could use a table-valued parameter (TVP) approach in this scenario instead of doing a bulk insert. The main goal here is to do a set-based approach so we’re not spamming the system with lots of individual inserts. Both approaches (TVP and bulk insert) work fine for this.

Background service with a log queue

Finally we can add the background service class and call it DatabaseLoggerService. To make this run as a background service, we have to implement BackgroundService.

We’ll implement the consumer/producer pattern by adding an async queue from System.Threading.Channels. The ILoggerService.Log() method will allow producers to enqueue log messages. We’ll implement the consumer loop in ExecuteAsync() (a method from BackgroundService).

Here’s the DatabaseLoggerService class:

using Microsoft.Extensions.Hosting;

using Microsoft.Extensions.Logging;

using System.Threading.Channels;

public class DatabaseLoggerService : BackgroundService, ILoggerService

{

private readonly Channel<LogMessage> logMessageQueue;

private readonly IHostApplicationLifetime HostApplicationLifetime;

private const int MAX_BATCH_SIZE = 10;

private readonly ILogRepository LogRepository;

public DatabaseLoggerService(ILogRepository logRepository, IHostApplicationLifetime hostApplicationLifetime)

{

logMessageQueue = Channel.CreateUnbounded<LogMessage>();

LogRepository = logRepository;

HostApplicationLifetime = hostApplicationLifetime;

}

public async override Task StopAsync(CancellationToken cancellationToken)

{

await base.StopAsync(cancellationToken);

}

protected async override Task ExecuteAsync(CancellationToken stoppingToken)

{

while(!stoppingToken.IsCancellationRequested)

{

try

{

Console.WriteLine("Waiting for log messages");

var batch = await GetBatch(stoppingToken);

Console.WriteLine($"Got a batch with {batch.Count}(s) log messages. Bulk inserting them now.");

//Let non-retryable errors from this bubble up and crash the service

await LogRepository.Insert(batch);

}

catch (TaskCanceledException)

{

Console.WriteLine("Stopping token was canceled, which means the service is shutting down.");

return;

}

catch (Exception ex)

{

Console.WriteLine($"Fatal exception in database logger. Crashing service. Error={ex}");

HostApplicationLifetime.StopApplication();

return;

}

}

}

public void Log(LogLevel logLevel, string message)

{

//The reason to use Writer.TryWrite() is because it's synchronous.

//We want the logging to be as fast as possible for the client, so

//we don't want the overhead of async

//Note: We're using an unbounded Channel, so TryWrite() *should* only fail

//if we call writer.Complete().

//Guard against it anyway

var logMessage = new LogMessage()

{

Message = message,

ThreadId = System.Threading.Thread.CurrentThread.ManagedThreadId,

Timestamp = DateTimeOffset.Now

};

if (!logMessageQueue.Writer.TryWrite(logMessage))

{

throw new InvalidOperationException("Failed to write the log message");

}

}

private async Task<List<LogMessage>> GetBatch(CancellationToken cancellationToken)

{

await logMessageQueue.Reader.WaitToReadAsync(cancellationToken);

var batch = new List<LogMessage>();

while (batch.Count < MAX_BATCH_SIZE && logMessageQueue.Reader.TryRead(out LogMessage message))

{

batch.Add(message);

}

return batch;

}

}

Code language: C# (cs)The producers will call Log() synchronously. This is fast because all it’s doing is enqueuing the message.

The consumer loop reads a batch of messages from the queue and then awaits the bulk insertion. I wouldn’t suggest fire and forgetting the bulk insertion. For one, waiting for it to complete acts as a throttling mechanism. You’re only ever doing one bulk insertion at once. Second, it simplifies error handling.

Notice that this is is calling StopApplication() if an exception bubbles up from LogRepository. As noted in the LogRepository section, it’s retrying a few times if there are transient SQL exceptions. For any other type of error, or if it exceeds the max retries, it’ll throw. This will trigger a graceful shutdown of the entire service, not just crash the background service. There are definitely other possible ways to handle this (such as logging to a fallback file). I decided to do the simplest approach with the assumption that this logging is critical and the service should stop if it fails to log.

Registering the services

To register DatabaseLoggerService, use both of these:

- AddSingleton() – So it can be dependency injected into the controllers.

- AddHostedService() – Because it’s a hosted background service.

Here’s an example:

//rest of adding services

builder.Services.AddSingleton<ILogRepository, LogRepository>(_

=> new LogRepository(Configuration.GetConnectionString("Default")));

builder.Services.AddSingleton<ILoggerService, DatabaseLoggerService>();

builder.Services.AddHostedService(sp => sp.GetService<ILoggerService>() as DatabaseLoggerService);

//Rest of init code

Code language: C# (cs)Note: Before .NET 6, do this in Startup.ConfigureServices().

Default connection string in appsettings.json

We can add a placeholder for the connection string in appsettings.json, and then add the real connection string as a user secret.

{

"Logging": {

"LogLevel": {

"Default": "Information",

"Microsoft": "Warning",

"Microsoft.Hosting.Lifetime": "Information"

}

},

"AllowedHosts": "*",

"ConnectionStrings": {

"Default": "The connection string is defined in the user secrets file"

}

}

Code language: JSON / JSON with Comments (json)Run it

To test this out and see it in action, run the ASP.NET service and send concurrent requests with HttpClient. Check the Log table in the database to verify that it inserted the messages.

To see the bulk insertions work, use the following test client that sends tons of concurrent requests:

var httpClient = new HttpClient();

var go = new ManualResetEventSlim();

for (int i = 1; i <= 77; i++)

{

var num = i; //capture for closure

Task.Run(async () =>

{

Console.WriteLine($"Num {num} waiting");

go.Wait();

Console.WriteLine($"Num {num} going");

var response = await httpClient.GetAsync($"https://localhost:12345/Recipes/{num}");

response.EnsureSuccessStatusCode();

Console.WriteLine($"Num {num} done");

});

}

go.Set();

Console.ReadLine();

Code language: C# (cs)Note: I tried sending concurrent requests with Postman, but it was too slow.

In addition to seeing the bulk insertions working, you can induce a transient SQL exception to see it do retries. The simplest way to do that is to set the database offline manually, wait for it to report the transient error, and then set the database back online manually.

Here’s an example of what it looks like when you run this:

CommandLoop starting

Waiting for log messages

Got a batch with 7(s) log messages. Bulk inserting them now.

Transient SQL error. Retrying in 5 seconds

Waiting for log messages

Got a batch with 10(s) log messages. Bulk inserting them now.

Waiting for log messages

Got a batch with 10(s) log messages. Bulk inserting them now.

Waiting for log messages

Got a batch with 10(s) log messages. Bulk inserting them now.

Waiting for log messages

Got a batch with 10(s) log messages. Bulk inserting them now.

Waiting for log messages

Got a batch with 10(s) log messages. Bulk inserting them now.

Waiting for log messages

Got a batch with 10(s) log messages. Bulk inserting them now.

Waiting for log messages

Got a batch with 10(s) log messages. Bulk inserting them now.

Waiting for log messagesCode language: plaintext (plaintext)The consumer reads between 1-10 messages from the queue. If there are 10 messages available, it’ll read all 10. Otherwise, it will read as many as it can. In the first instance, notice that it only read 7 messages. That’s because there were only 7 messages available on the queue at the time. After that, it was able to read 10 messages every time.

Also notice that it detected the transient SQL error, waited 5 seconds, and tried again. It succeeded when it retried.

Source code

The full source code for the background database logger shown in this article is available here: https://github.com/makolyte/aspdotnet-background-dblogger

Thanks! I come to this “problem” today (‘When logging to SQL Server, don’t do so directly. ‘), and I was trying to do what you do!, very useful

You’re welcome, Johnatan. I’m glad this helped.

Thanks for the detailed article. It helped me understand so many concepts on different levels. Have few follow-up questions though.

What would happen if the main thread ends before background worker processed all the messages in queue? Do we lose those messages?

If somebody sends a cancel request, how do we ensure all the messages that are in queue sent to the database before the While loop on line 23 in DatabaseLoggerService exits? There could be another batch of messages in the queue and while loop could exit because of the cancellation request sent by main thread by calling dispose() or when it comes to the end of application.

Also, with an in-memory queue, will the order of the log messages preserved?

Thank you in advance so much!

Hi Raj,

I’m glad this helped you. I’ll answer your questions below.

Question 1 – “What would happen if the main thread ends before background worker processed all the messages in queue?”

When the process starts a graceful shutdown, it calls the background service’s StopAsync(). This gives you a chance to do a graceful shutdown. In this case, you can process ALL remaining messages on the queue and then stop the ExecuteAsync() loop.

a. By default, you have 5 seconds to do a graceful shutdown. Extend this by adding this to the initialization logic (configure however much time you want):

services.Configure<HostOptions>(opts => opts.ShutdownTimeout = TimeSpan.FromMinutes(1));

b. In StopAsync(), here’s an example of how you process ALL remaining messages and insert them (instead of processing them in batches)

public async override Task StopAsync(CancellationToken cancellationToken)

{

try

{

//Stops new messages from being enqueued. Any attempts will results in InvalidOperationException

//Assume no new logging will happen after graceful shutdown is started

logMessageQueue.Writer.Complete();

//Get all remaining log messages from the queue and bulk insert them all

var allRemainingLogMessages = new List<LogMessage>();

while (logMessageQueue.Reader.TryRead(out LogMessage item))

{

allRemainingLogMessages.Add(item);

}

Console.WriteLine("Inserting all remaining log messages");

await LogRepository.Insert(allRemainingLogMessages);

}

finally

{

//Cancels stoppingToken (so the ExecuteAsync() loop will stop after in-progress work is completed)

await base.StopAsync(cancellationToken);

}

}

When base.StopAsync() is called, it cancels the stoppingToken, which stops the ExecuteAsync() loop. If there’s any in-progress batch being processed, it will complete (stoppingToken is only used when waiting for new items on the queue, and after a batch is processed) and then exit the loop.

Question 2 – “Do we lose those messages?”

According to Microsoft documentation, it’s possible for the process to be shutdown unexpectedly and not do a graceful shutdown. In that case, you’d definitely lose in-progress work (the log messages).

Question 3 – “With an in-memory queue, will the order of the log messages preserved?”

No, but that’s what the log timestamp is for. You can order messages by the timestamp.

Since there are multiple producers trying to write log messages concurrently, the order that messages actually get enqueued isn’t guarenteed. The messages are timestamped BEFORE getting enqueued though, and that happens in the producer thread when it calls LoggerService.Log(). That means the times will be correct.

The consumer processes messages (inserts them into the database) in queue order, but you’re probably not referring to that.

Thank you, Mak for your prompt and detailed response.

I assume this background service can be put in a library and distribute as nuget pacakge so that multiple applications (ASP .NET, .Net 6) can use this library to log messages to a centralized location.

You’re welcome! Yes, this could be generalized and put into a reusable library.

Do you think using background service should be a go-to approach to deal with this pattern?

I can also use a background thread(Task.Run(ProcessLogMessage)) to process the log messages from queue.

What are your thoughts on both the approaches? which one do you prefer?

void ProcessLogMessages()

{

///retrieve data from queue and send it to destination

}

Yes, I believe the background service is the way to go. There’s one queue consumer and it waits for work in a non-blocking manner.

Let’s discuss the other approach you mentioned. First, I’m assuming there’s an in-memory queue that everything has access to. My main question is when and where are you calling Task.Run(ProcessLogMessage)? Are you going to allow multiple queue consumers to run at once, and how many? Worst case, it’s uncontrolled, and you end up with N queue consumers all processing 1 messages at once. That eliminates the benefit of batching up the messages and doing bulk insertions. It’s practically the same as executing N number of insertions – which quickly degrades performance. On the other hand, let’s say you only want to allow one queue consumer at a time. Worst case, you have N number of fire-and-forget tasks all waiting at a lock. Those threads are being wasted.

With the background service, no threads are being wasted. It’s doing a non-blocking wait for work. Furthermore, it maximizes the benefits of doing bulk insertions (stated differently, it minimizes the degrading effects of doing many individual inserts).

Thank you again for the details.

Yes, the idea is to create a library with a custom logging service. This service holds the logic of in-memory queue creation and kicking of background thread. Applications consuming the library will have the ability to register the logging service into the DI container during the start up process. At the time of registration, we will kick-off the background thread that will look for the messages in the queue. Before the main thread finishes, we will call .wait() on the background which is a blocking call(i know it is not good to use) so that all the remaining messages in the queue are processed. Could not think of better way to handle – “what if the main thread ends before background thread processed all the messages in queue” scenario.

Please let me know your feedback on this approach. I agree your solution is much cleaner one.

Thanks again!

Hey Raj,

I like your idea for putting this in a library.

Regarding controlling the shutdown, you can do what you’re saying from within the background service itself. Override StopAsync() and finish the work (see code in one my replies above). I’d suggest doing it through this supported approach to be on the safe side. In a previous reply, I mentioned that you have a set amount of time to perform the shutdown, but that was old behavior that’s changed now, and you have total control over the shutdown now. I looked deeper into this and wrote about it here: Control the graceful shutdown time for background services.

By the way, if you’re interested, I could review your code when you build this (consulting). I have your email in the comments, so if you want, I can reach out to you.