The HttpClient class was designed to be used concurrently. It’s thread-safe and can handle multiple requests. You can fire off multiple requests from the same thread and await all of the responses, or fire off requests from multiple threads. No matter what the scenario, HttpClient was built to handle concurrent requests.

To use HttpClient effectively for concurrent requests, there are a few guidelines:

- Use a single instance of HttpClient.

- Define the max concurrent requests per server.

- Avoid port exhaustion – Don’t use HttpClient as a request queue.

- Only use DefaultRequestHeaders for headers that don’t change.

In this article I’ll explain these guidelines and then show an example of using HttpClient while applying these guidelines.

Use a single instance of HttpClient

HttpClient was designed for concurrency: a single instance is meant to serve all of your requests. It reuses sockets for subsequent requests to the same server instead of allocating a new socket each time.

HttpClient implements IDisposable, which leads developers to think it needs to be disposed after every request, and therefore use it incorrectly like this:

//Don't do this

using(HttpClient http = new HttpClient())

{

var response = await http.GetAsync(url);

//check status, return content

}

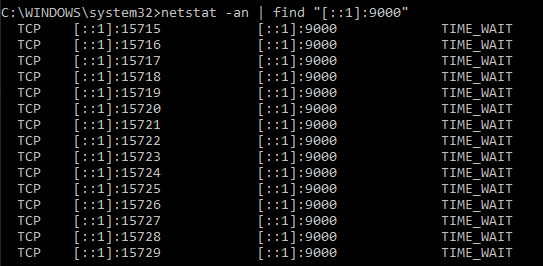

Code language: C# (cs)To show the problem with this, here’s what happens when I fire off 15 requests using new instances of HttpClient for each request:

It allocated 15 sockets – one for each request. Because each HttpClient was disposed, its socket can’t be reused and simply lingers until the system eventually closes it. This is not only a waste of resources, but can also lead to port exhaustion (more on this later).

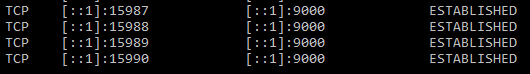

Now here’s what happens when I fire off 15 requests using a single instance of HttpClient (with a max concurrency of four)

It received 15 concurrent requests and only allocated four sockets total. It reused the existing sockets for subsequent requests.
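For contrast with the "don't do this" snippet, here is a minimal sketch of the single-instance approach. It assumes the local test endpoint used later in this article; the `FetchAsync` helper and the five-second timeout are illustrative choices, not part of the original example. Failures are caught so the sketch runs to completion even when nothing is listening on that port.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Threading.Tasks;

// One HttpClient for the whole app: created once, never disposed per request.
var http = new HttpClient { Timeout = TimeSpan.FromSeconds(5) };

// Assumed endpoint (the article's local test service); substitute your own URL.
const string url = "http://localhost:9000/api/getrandomnumber";

// Hypothetical helper: every call shares the same HttpClient instance.
async Task<string> FetchAsync(int id)
{
    try
    {
        return $"{id}: {await http.GetStringAsync(url)}";
    }
    catch (Exception ex) when (ex is HttpRequestException || ex is OperationCanceledException)
    {
        // Connection failures and timeouts are reported instead of thrown.
        return $"{id}: failed ({ex.GetType().Name})";
    }
}

// Fire off 15 requests from the same thread and await all of the responses.
string[] results = await Task.WhenAll(Enumerable.Range(1, 15).Select(i => FetchAsync(i)));

foreach (var line in results)
{
    Console.WriteLine(line);
}
```

Because the client outlives the individual calls, its pooled connections stay available for later requests to the same server.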

Define the max concurrent requests per server

To set the max concurrent requests per server:

- Create a SocketsHttpHandler.

- Set SocketsHttpHandler.MaxConnectionsPerServer.

- Pass in the SocketsHttpHandler in the HttpClient constructor.

Here’s an example:

```csharp
var socketsHttpHandler = new SocketsHttpHandler()
{
    MaxConnectionsPerServer = 16
};
var httpClient = new HttpClient(socketsHttpHandler);
```

Note: If you’re using .NET Framework, refer to the Setting the max concurrency in .NET Framework section below.

Set the max concurrency to whatever makes sense in your situation.

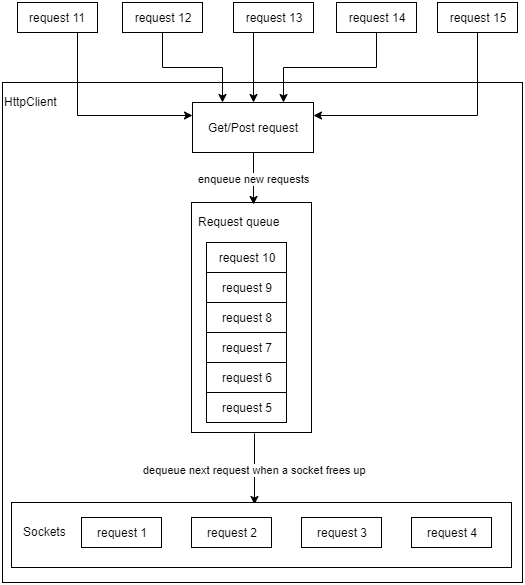

The single HttpClient instance uses the connection limit to determine the max number of sockets it will use concurrently. Think of it as having a request queue. When the number of concurrent requests > max concurrency, the remaining requests wait in a queue until sockets free up.

For example, let’s say you want to fire off 15 requests concurrently (with max concurrency = four). The following diagram shows how the HttpClient will have four sockets open at once, processing a maximum of four requests concurrently. Meanwhile, the remaining 11 requests will queue up, waiting for a socket to free up.
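To get a feel for the numbers, the queuing behavior can be simulated without any network calls. This is only a sketch: a SemaphoreSlim stands in for the connection limit, Task.Delay stands in for the server, and none of it reflects how HttpClient is implemented internally. The 100 ms delay and all names are made up for illustration.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

const int maxConcurrency = 4;
const int totalRequests = 15;

// Stand-in for the connection limit: four slots, everyone else waits in line.
var slots = new SemaphoreSlim(maxConcurrency);
var gate = new object();
int inFlight = 0, peakInFlight = 0, completed = 0;

async Task SimulateRequestAsync()
{
    await slots.WaitAsync(); // requests beyond the limit queue up here
    try
    {
        lock (gate)
        {
            inFlight++;
            peakInFlight = Math.Max(peakInFlight, inFlight);
        }

        await Task.Delay(100); // pretend the server takes 100 ms to respond
    }
    finally
    {
        lock (gate)
        {
            inFlight--;
            completed++;
        }
        slots.Release(); // frees a slot for the next queued request
    }
}

await Task.WhenAll(Enumerable.Range(0, totalRequests).Select(_ => SimulateRequestAsync()));

Console.WriteLine($"Completed {completed} requests; peak in flight: {peakInFlight}");
```

All 15 simulated requests complete, and the peak number in flight never exceeds four.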

For reference – Setting the max concurrency in .NET Framework

2023-03-10 – Added to distinguish how to set the max concurrency in .NET Core vs .NET Framework.

In .NET Core apps going forward, use SocketsHttpHandler.MaxConnectionsPerServer to set the max concurrency. In .NET Framework, you could set the max concurrency with ServicePointManager, like this:

```csharp
private void SetMaxConcurrency(string url, int maxConcurrentRequests)
{
    ServicePointManager.FindServicePoint(new Uri(url)).ConnectionLimit = maxConcurrentRequests;
}
```

If you don’t explicitly set this, then it uses ServicePointManager.DefaultConnectionLimit. This is 10 for ASP.NET and two for everything else.

Avoid port exhaustion – Don’t use HttpClient as a request queue

In the previous section I explained how the HttpClient has an internal request queue. In this section I’m going to explain why you don’t want to rely on HttpClient’s request queuing.

In the best case scenario, 100% of your requests get processed successfully and quickly. In the real world that never happens. We need to be realistic and deal with the possibility of things going wrong.

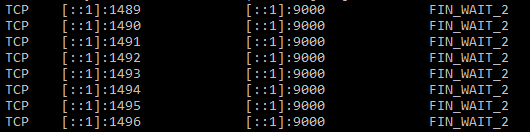

To illustrate the problem, I’m sending 15 concurrent requests, and they will all timeout on purpose. I have a max concurrency of four, so you would expect HttpClient to only open four sockets maximum. But here’s what really happens:

There are more than four sockets open at once, and HttpClient will keep opening new sockets as it processes requests.

In other words, when things are going right, it’ll cap the number of sockets it allocates based on the max concurrency you specified. When things are going wrong, it’ll waste sockets. If you are processing lots of requests, this can quickly snowball out of control and lead to port exhaustion. When there aren’t enough ports available to allocate sockets on, network calls start failing all over the system.

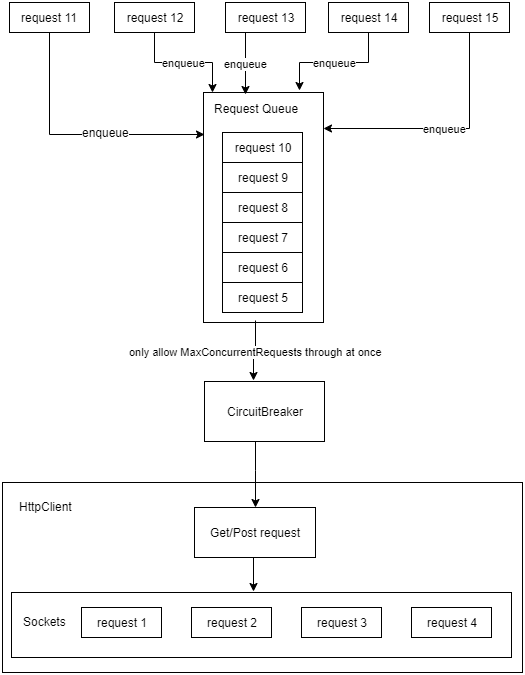

The solution is to not rely on HttpClient as a request queue. Instead, handle request queuing yourself and implement a Circuit Breaker strategy that makes sense in your situation. The following diagram shows this approach in general:

How you implement the request queuing mechanism and circuit breaker will depend on what makes sense for your situation.
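As one possible strategy, here is a rough sketch of a time-based circuit breaker: once tripped, it rejects requests for a fixed cooldown and then closes again on its own. This is not the approach used in the example later in this article (that one stays tripped until closed manually). The 30-second cooldown and the names `Trip` and `IsTripped` are assumptions for illustration, and the current time is passed in explicitly so the behavior is easy to check.

```csharp
using System;

// Assumed policy: after tripping, reject requests for 30 seconds, then close automatically.
TimeSpan cooldown = TimeSpan.FromSeconds(30);
DateTime? trippedAtUtc = null; // null means the circuit is closed
object gate = new object();

// Trips the circuit unless it is already tripped.
void Trip(DateTime nowUtc)
{
    lock (gate)
    {
        trippedAtUtc ??= nowUtc;
    }
}

// Returns true while requests should be rejected.
bool IsTripped(DateTime nowUtc)
{
    lock (gate)
    {
        if (trippedAtUtc is DateTime trippedAt && nowUtc - trippedAt >= cooldown)
        {
            trippedAtUtc = null; // cooldown elapsed: close the circuit again
        }
        return trippedAtUtc.HasValue;
    }
}

var start = DateTime.UtcNow;
Console.WriteLine(IsTripped(start));                // False (closed initially)
Trip(start);
Console.WriteLine(IsTripped(start.AddSeconds(10))); // True (still within the cooldown)
Console.WriteLine(IsTripped(start.AddSeconds(31))); // False (cooldown elapsed)
```

In real code you would pass DateTime.UtcNow and check IsTripped before handing a request to HttpClient.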

Example – making concurrent requests with HttpClient

I have an endpoint at http://localhost:9000/api/getrandomnumber. This returns a randomly generated number. I’m going to use a max concurrency of four, and call this with 15 concurrent requests.

I’ve implemented this using the guidelines explained in this article:

- Use a single instance of HttpClient.

- Set the max concurrency.

- Don’t use HttpClient as a request queue.

Instead of using HttpClient as a request queue, I’m using a semaphore as a request queue. I’m using a simple circuit breaker strategy: when a problem is detected, trip the circuit, and don’t send any more requests to HttpClient. It’s not doing automatic retries, and is not automatically closing the circuit. Remember: you’ll want to use a circuit breaker strategy that makes sense in your situation.

RandomNumberService class

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public class RandomNumberService
{
    private readonly HttpClient HttpClient;
    private readonly string GetRandomNumberUrl;
    private readonly SemaphoreSlim semaphore;
    private long circuitStatus;
    private const long CLOSED = 0;
    private const long TRIPPED = 1;
    public readonly string UNAVAILABLE = "Unavailable";

    public RandomNumberService(string url, int maxConcurrentRequests)
    {
        GetRandomNumberUrl = url;

        var socketsHttpHandler = new SocketsHttpHandler()
        {
            MaxConnectionsPerServer = maxConcurrentRequests
        };
        HttpClient = new HttpClient(socketsHttpHandler);

        //The semaphore is the request queue: at most maxConcurrentRequests reach HttpClient at once
        semaphore = new SemaphoreSlim(maxConcurrentRequests);

        circuitStatus = CLOSED;
    }

    public void CloseCircuit()
    {
        if (Interlocked.CompareExchange(ref circuitStatus, CLOSED, TRIPPED) == TRIPPED)
        {
            Console.WriteLine("Closed circuit");
        }
    }
    private void TripCircuit(string reason)
    {
        //Only the caller that actually flips CLOSED to TRIPPED logs the reason
        if (Interlocked.CompareExchange(ref circuitStatus, TRIPPED, CLOSED) == CLOSED)
        {
            Console.WriteLine($"Tripping circuit because: {reason}");
        }
    }
    private bool IsTripped()
    {
        return Interlocked.Read(ref circuitStatus) == TRIPPED;
    }
    public async Task<string> GetRandomNumber()
    {
        //Wait outside the try block so finally only releases a slot that was actually acquired
        await semaphore.WaitAsync();
        try
        {
            if (IsTripped())
            {
                return UNAVAILABLE;
            }

            using var response = await HttpClient.GetAsync(GetRandomNumberUrl);

            if (response.StatusCode != HttpStatusCode.OK)
            {
                TripCircuit(reason: $"Status not OK. Status={response.StatusCode}");
                return UNAVAILABLE;
            }

            return await response.Content.ReadAsStringAsync();
        }
        //HttpClient timeouts surface as TaskCanceledException, which derives from OperationCanceledException
        catch (OperationCanceledException)
        {
            Console.WriteLine("Timed out");
            TripCircuit(reason: "Timed out");
            return UNAVAILABLE;
        }
        finally
        {
            semaphore.Release();
        }
    }
}
```

Notes:

- 2021-08-31 – Updated to use the correct circuit terminology (“closed” instead of “open”).

- 2023-03-10 – Updated to set the max concurrency with SocketsHttpHandler.MaxConnectionsPerServer (the preferred way to do it in .NET Core).

Sending 15 concurrent requests

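```csharp
var service = new RandomNumberService(url: "http://localhost:9000/api/getrandomnumber", maxConcurrentRequests: 4);

var tasks = new List<Task>();
for (int i = 0; i < 15; i++)
{
    tasks.Add(Task.Run(async () =>
    {
        Console.WriteLine("Requesting random number");
        Console.WriteLine(await service.GetRandomNumber());
    }));
}

//Wait for all 15 requests so the process doesn't exit before they finish
await Task.WhenAll(tasks);
```

Results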

```plaintext
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Requesting random number
Timed out
Timed out
Timed out
Tripping circuit because: Timed out
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Unavailable
Timed out
Unavailable
```

15 requests are sent concurrently. Only four are actually sent to HttpClient at once. The remaining 11 await the semaphore.

All four that are being processed by the HttpClient time out. All four of them try to mark the circuit as tripped (only one reports that it tripped it).

One by one, the semaphore lets the next requests through. Since the circuit is tripped, they simply return “Unavailable” without even attempting to go through the HttpClient.

Only use DefaultRequestHeaders for headers that don’t change

Updated article (9/30/21) with this new section.

HttpClient.DefaultRequestHeaders isn’t thread-safe. It should only be used for headers that don’t change. You can set these when initializing the HttpClient instance.

If you have headers that change, set the header per request instead by using HttpRequestMessage and SendAsync(), like this:

```csharp
using (var request = new HttpRequestMessage(HttpMethod.Get, GetRandomNumberUrl))
{
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", Token);
    var response = await HttpClient.SendAsync(request);

    response.EnsureSuccessStatusCode();

    return await response.Content.ReadAsStringAsync();
}
```
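To make the split between fixed and changing headers concrete, here is a small sketch that sets an Accept header once on the shared client and builds each request with its own bearer token. Nothing is sent over the network. The `BuildRequest` helper and the token strings are placeholders for illustration, and the URL is the test endpoint from this article.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Headers that never change are set once, before the client is shared across threads.
var httpClient = new HttpClient();
httpClient.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));

// Hypothetical helper: builds a request that carries its own bearer token.
HttpRequestMessage BuildRequest(string url, string token)
{
    var request = new HttpRequestMessage(HttpMethod.Get, url);
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
    return request;
}

// Placeholder tokens; in practice these would come from your auth flow.
using var first = BuildRequest("http://localhost:9000/api/getrandomnumber", "token-for-user-a");
using var second = BuildRequest("http://localhost:9000/api/getrandomnumber", "token-for-user-b");

Console.WriteLine(first.Headers.Authorization);  // Bearer token-for-user-a
Console.WriteLine(second.Headers.Authorization); // Bearer token-for-user-b

// The shared defaults were never touched by the per-request tokens.
Console.WriteLine(httpClient.DefaultRequestHeaders.Authorization is null); // True
```

Each request would then go through httpClient.SendAsync(request), so concurrent calls never write to shared header state.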

Comments

Alas, this approach does not work in UWP apps. Upon the second request, the system throws an exception:

“A concurrent or interleaved operation changed the state of the object.”

Hi Mike,

Are you referring to using Windows.Web.Http.HttpClient in UWP? This article is referring to System.Net.Http.HttpClient. Please clarify.

Is the circuit breaker really necessary, or just an efficiency thing? I can see it being useful if the server we query goes down, then we can just report it being down for a given time span. Is it significant beyond that?

Hi Shawn,

It’s not a question of if the endpoint will go down, but when. Because it’s an inevitability, it’s important to make sure your code can handle this scenario gracefully by using circuit breaker logic.

Let’s say your code doesn’t have circuit breaker logic and the endpoint goes down for a while. Your code will keep sending requests. Each request will be sent on a new socket, using up a port, and the socket will linger around for a few minutes after the request has failed. Eventually so many ports will be used up that it leads to “port exhaustion,” which blocks ALL requests in the entire system (because they can’t allocate a port to send on).

The circuit breaker prevents this low probability, high impact error scenario, so it makes sense to do it.

Just to be clear, in this article, I showed a very basic circuit breaker approach (with the intention of getting people to think about how they want to do their own circuit breaker). You’re right though, a “time-based” circuit breaker approach is a good way to go.

Can we use Polly circuit breaker instead of creating one on our own?

Yes, you can use Polly instead of writing your own circuit breaker logic.

Is the code example in a repo or somewhere else for consultation?

Hi Mike,

The code can be found in the following repository: https://github.com/makolyte/concurrent-requests-with-httpclient

This contains two projects:

1. /client/ – This is a console app that runs the example code shown in this article. It sends concurrent requests to GET /RandomNumber/

2. /server/ – This is an ASP.NET Web API service stub. This has the GET /RandomNumber/ endpoint.

Thanks so much 🙂

Great post, very helpful. One nit… technically, an “Open” circuit is the same as a Tripped circuit… a “Closed” circuit is one through which electricity flows. Could be a little confusing for C# developers that are also DIY weekend warriors. 🙂

Great catch. I updated the code and wording to use the correct circuit terminology (“closed” instead of “open” when it’s allowing requests through). Thanks for pointing that out Vince!

Hey Mak, I don’t know if I’ll receive a response to this, but when we have a single HttpClient instance handling multiple concurrent requests, the problem I see is with the headers. Since DefaultRequestHeaders is not thread-safe, and I need to pass, for example, a bearer token or other headers with dynamic values, how do we handle that?

Very good question. I didn’t think about covering that in this article, but now I realize that I should.

To answer your question, you’ll want to set the headers per request. To do that with HttpClient, you have to create an HttpRequestMessage, set the headers, and use HttpClient.SendAsync(). Unfortunately you can’t just use GetAsync() / PostAsync(), so it’s a little harder to use. I’ll update the article with a code example showing how to do that.

Hi,

I’ve got a scenario where I set DefaultConnectionLimit to 10.

99% of the time calls are being executed in series, but every so often concurrent calls can happen.

But when this does happen the additional connections which get created never close. They sit in Close_Wait state.

If there is an idle period of around 2 minutes, then these connections close. But while there is activity on the HttpClient, I’m not able to get the additional connections to close.

Do you have any advice for me here, perhaps?

Many thanks

Note: .NET Framework 4.7.2

I re-ran the tests using .NET 4.7.2. It took much longer for the CLOSE_WAIT sockets to close. It took > 5 minutes, whereas with .NET Core it took < 1 minute.

You can control how long a socket will stay open by setting ConnectionLeaseTimeout, like this:

```csharp
ServicePointManager.FindServicePoint(url).ConnectionLeaseTimeout = 60000; //1 minute
```

In this example, it's set to 1 minute (in milliseconds). After 1 minute, sockets are released whether they are active (just used) or idling. It gets rid of the CLOSE_WAIT sockets quickly.

Because this releases active sockets, it's a tradeoff between maximizing throughput and minimizing wasted sockets. In scenarios where you're sending tons of concurrent requests all the time, it would impact performance, but it probably wouldn't be noticeable.

(summary of email conversation about this)

Hi Mak,

I’m really enjoying your content and learning a great deal. Question, is there a replacement using HttpClient for ServicePointManager.FindServicePoint. ServicePointManager.FindServicePoint is marked as being obsolete. Thanks in advance.

Thanks Dan, I’m happy to hear that!

To answer your question, you can use SocketsHttpHandler instead of ServicePointManager. See this updated section Define the max concurrent requests per server (updated 2023-03-10 to better answer this comment and the one after it).
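For the ConnectionLeaseTimeout setting discussed earlier in this thread, the SocketsHttpHandler properties PooledConnectionLifetime and PooledConnectionIdleTimeout appear to cover roughly the same ground in .NET Core and later. The sketch below only configures the handler and sends nothing. The one-minute and 30-second values are illustrative, not recommendations.

```csharp
using System;
using System.Net.Http;

var handler = new SocketsHttpHandler
{
    MaxConnectionsPerServer = 16,
    // Retire a pooled connection after one minute, even if it is still being reused.
    PooledConnectionLifetime = TimeSpan.FromMinutes(1),
    // Close connections that sit unused in the pool for 30 seconds (illustrative value).
    PooledConnectionIdleTimeout = TimeSpan.FromSeconds(30)
};

// The handler is passed to the single shared HttpClient, as elsewhere in this article.
var httpClient = new HttpClient(handler);

Console.WriteLine(handler.PooledConnectionLifetime); // 00:01:00
```

As with ConnectionLeaseTimeout, a shorter lifetime trades some connection reuse for quicker cleanup of stale sockets.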

Dear Mak, thanks for the insightful read!

I am trying to understand whether a .NET Core 3.1 console app is really sending the desired number of parallel requests (16 in my scenario). ServicePointManager seems to have no effect on this. I tried changing the ConnectionLimit (from the default 2 to, e.g., 16), but maybe I should be changing other options?

Also, it seems it is not used in .NET Core, per answers and comments pointing to https://learn.microsoft.com/en-us/dotnet/api/system.net.http.httpclienthandler.maxconnectionsperserver?view=netcore-2.0#remarks which has int’s max value set as default.

Care to comment please?

Hi Vedran,

In .NET Core, pass in a SocketsHttpHandler and set MaxConnectionsPerServer.

```csharp
var socketsHttpHandler = new SocketsHttpHandler()
{
    MaxConnectionsPerServer = 16
};
var httpClient = new HttpClient(socketsHttpHandler);
```

In my testing, ServicePointManager always works fine, even all the way up to .NET 7. I originally wrote this article after dealing with a massive performance problem in a .NET Framework service. Since then, Microsoft has released several iterations of .NET Core and has changed the networking code quite a bit. One of the major changes is they made ServicePointManager obsolete (which is weird, because it definitely still works fine, at least in some cases). It’s now a good idea to use SocketsHttpHandler instead of ServicePointManager.

Thank you for pointing this out. I will update the article accordingly.

Will your code work for asp.net mvc web application?

Yup. You can use HttpClient as shown in any type of .NET app.